Introduction

This long-winded blog post presents our technical assessment of an IP Clos solution that does not depend on vendor-proprietary technology. Our goal is to assess the maturity of the equipment and to understand how this technology may interoperate with our carrier network.

The bleeding-edge topic of today is configuring EVPN for multipoint Ethernet services over MPLS networks. Unfortunately, at the time of publication (September 2016) Juniper QFX5100 switches do not support EVPN-MPLS. Instead, in this lab we use VXLAN as the data-plane encapsulation for delivering Ethernet services in the data center, as an alternative to QFabric. This encapsulation option for the EVPN overlay is briefly described in this Internet-Draft.

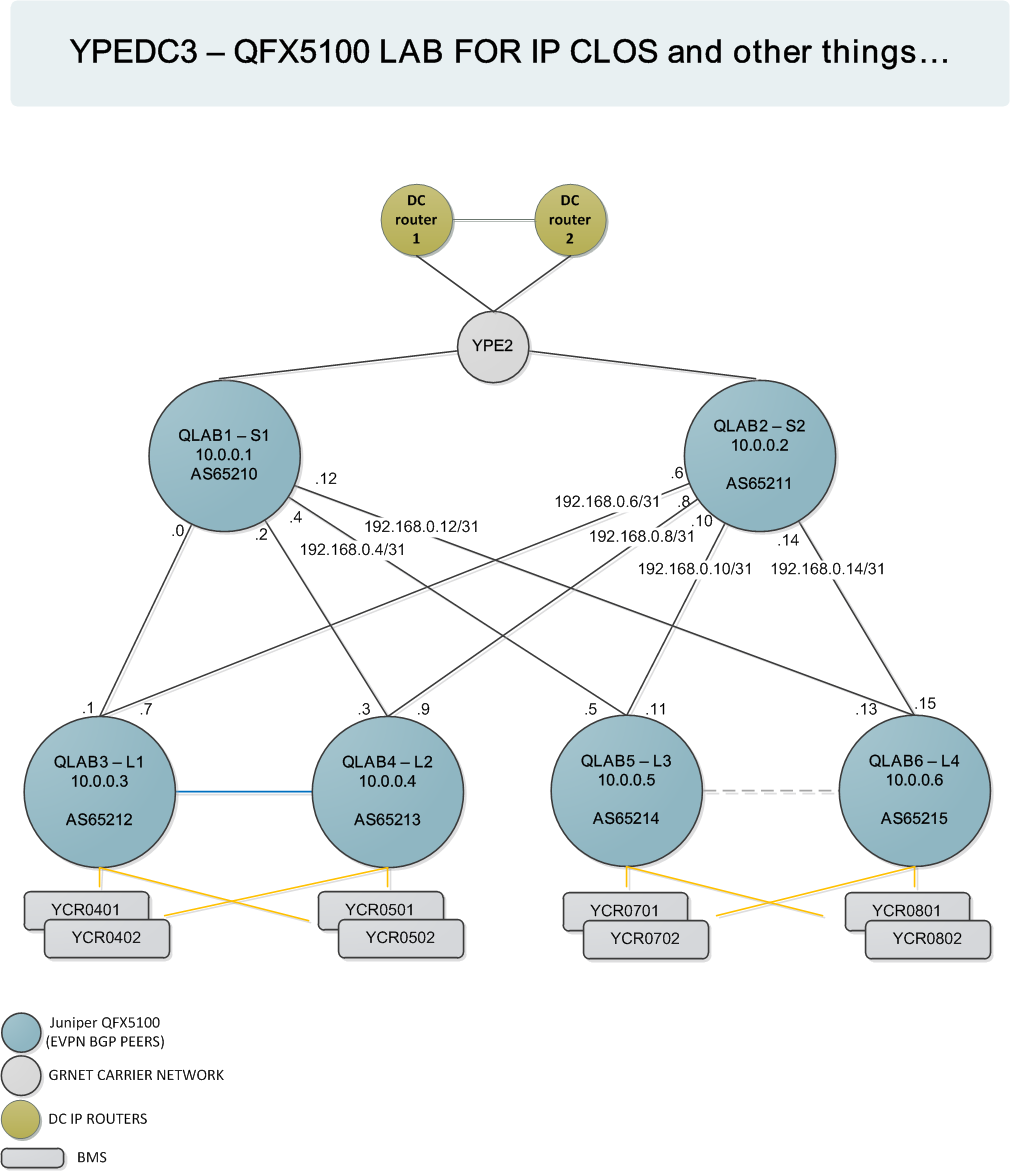

The lab topology is based on a Clos network topology, which lets us scale out an IP fabric over which the Layer-2 network can connect multiple server ports. All fabric links between leaf and spine switches are 40G Ethernet.

The lab topology is as follows:

One detail specific to our lab is that, since all devices are QFX5100s, Layer 3 is provided by our data center routers, which effectively makes all lab devices leaf switches. In a production IP Clos topology, Layer 3 is typically configured on the spine devices, mainly because of the leaf switches' limited support for inter-VLAN routing.

There are also two links between qlab3 and qlab4, and two between qlab5 and qlab6. They were added for testing purposes, including active/active scenarios based on a virtual chassis with two ToR members.

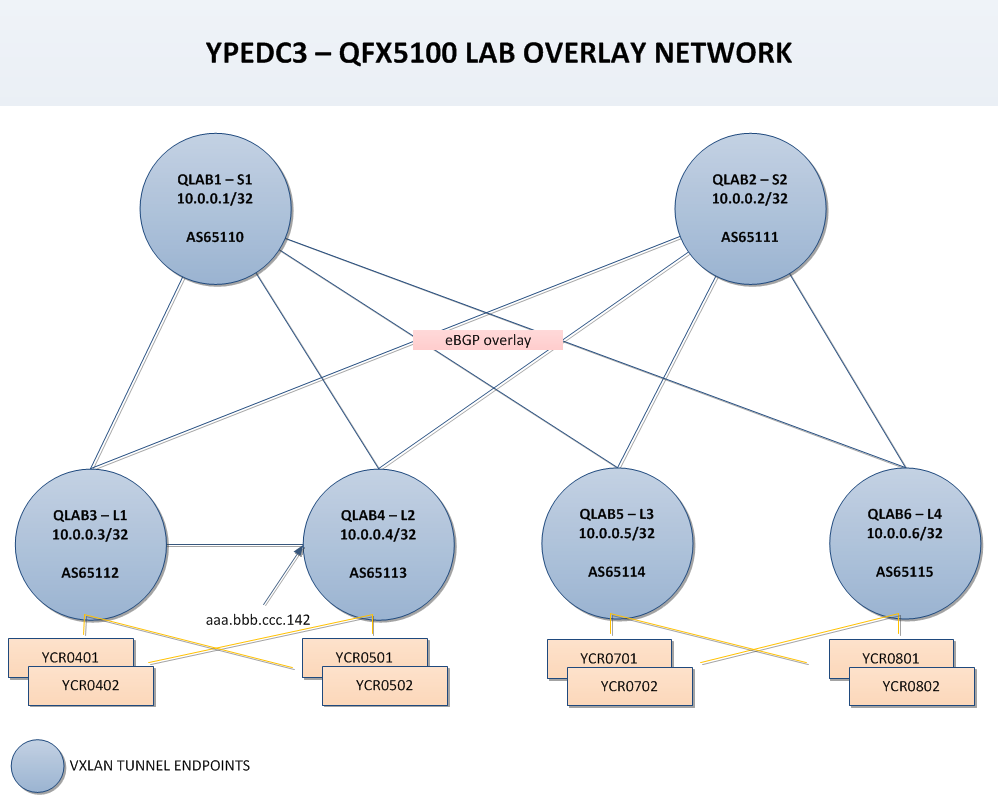

VXLAN is an overlay technology that provides a Layer-2 network over a routed Layer-3 underlay by tunneling Ethernet frames in IP/UDP (MAC-in-IP/UDP encapsulation). RFC 7348 originally defined VXLAN as a multicast-based flood-and-learn mechanism without a control plane, in which MAC addresses are learned from the data plane. In a controller-less overlay, the alternative to multicast is to use EVPN as the control-plane protocol. The EVPN control plane provides VTEP peer discovery and distributes end-host reachability information, and it also introduces new features such as redundant gateways. Using route distinguishers and route targets (the latter carried as BGP extended communities), each VTEP exchanges MAC addresses with the other VTEPs that are interested in a specific VXLAN Network Identifier (VNI).

Routing between VXLANs cannot be handled by the QFX5100, since its Broadcom chipset does not support routing of VXLAN traffic (see p. 17 in the image above). In a production environment the spine switches would therefore typically be QFX10K, EX9200 or MX Series devices.
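To make the encapsulation concrete, the Python sketch below packs the 8-byte VXLAN header as laid out in RFC 7348: an 8-bit flags field with the I bit (0x08) set to mark a valid VNI, 24 reserved bits, the 24-bit VNI, and 8 more reserved bits. The function names vxlan_header and vxlan_vni are our own; this only illustrates the header layout and says nothing about how the QFX5100 builds packets in hardware.

```python
import struct

VXLAN_FLAG_I = 0x08      # "I" flag: the VNI field is valid (RFC 7348)
VXLAN_UDP_PORT = 4789    # IANA-assigned UDP destination port for VXLAN


def vxlan_header(vni: int) -> bytes:
    """Return the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) followed by 24 reserved bits.
    # Word 2: VNI (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def vxlan_vni(header: bytes) -> int:
    """Extract the VNI from the first 8 bytes of a VXLAN header."""
    _flags_word, vni_word = struct.unpack("!II", header[:8])
    return vni_word >> 8


hdr = vxlan_header(481)  # VNI used for the PROVISIONING VLAN in this lab
print(hdr.hex())         # 080000000001e100
print(vxlan_vni(hdr))    # 481
```

The 24-bit VNI field is what allows roughly 16 million segments, compared with 4094 usable 802.1Q VLAN IDs.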

Overhead

The encapsulation adds a total of 496 bits (62 bytes) of overhead to the original Layer-2 frame, made up of:

- 64 bits VXLAN header

- 64 bits outer UDP header

- 160 bits outer IP header

- 208 bits outer Ethernet header
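The arithmetic can be checked with a few lines of Python. The sketch below sums the four components listed above and compares the result with the gap between the 9216-byte fabric MTU and the 9022-byte MTU used later on the server-facing ports. Note that the header-only overhead usually quoted for VXLAN over IPv4 is 50 bytes (14-byte untagged outer Ethernet header + 20 + 8 + 8); the 26 bytes listed above for the outer Ethernet header presumably count additional framing, so treat both totals as estimates. The comparison also assumes that both MTU values count the same Layer-2 framing.

```python
# Per-header overhead in bits, as listed above.
OVERHEAD_BITS = {
    "VXLAN header": 64,
    "outer UDP header": 64,
    "outer IPv4 header": 160,
    "outer Ethernet header": 208,
}

total_bits = sum(OVERHEAD_BITS.values())
total_bytes = total_bits // 8
print(total_bits, total_bytes)  # 496 62

# Header-only variant: 14-byte outer MAC header instead of 26 bytes.
header_only_bytes = total_bytes - 26 + 14
print(header_only_bytes)  # 50

FABRIC_MTU = 9216  # et-0/0/48, et-0/0/49
SERVER_MTU = 9022  # VIMA-LAB group on the server-facing ports
headroom = FABRIC_MTU - SERVER_MTU
print(headroom, headroom >= total_bytes)  # 194 True
```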

Inter-Fabric Links

All fabric links use the maximum MTU of 9216 bytes and are configured as follows (lab-3 shown):

```
##########
### LAB-3
##########
et-0/0/48 {
    description "to lab1 - spine#1";
    mtu 9216;
    unit 0 {
        family inet {
            address 192.168.0.1/31;
        }
    }
}
et-0/0/49 {
    description "to lab2 - spine#2";
    mtu 9216;
    unit 0 {
        family inet {
            address 192.168.0.7/31;
        }
    }
}
```

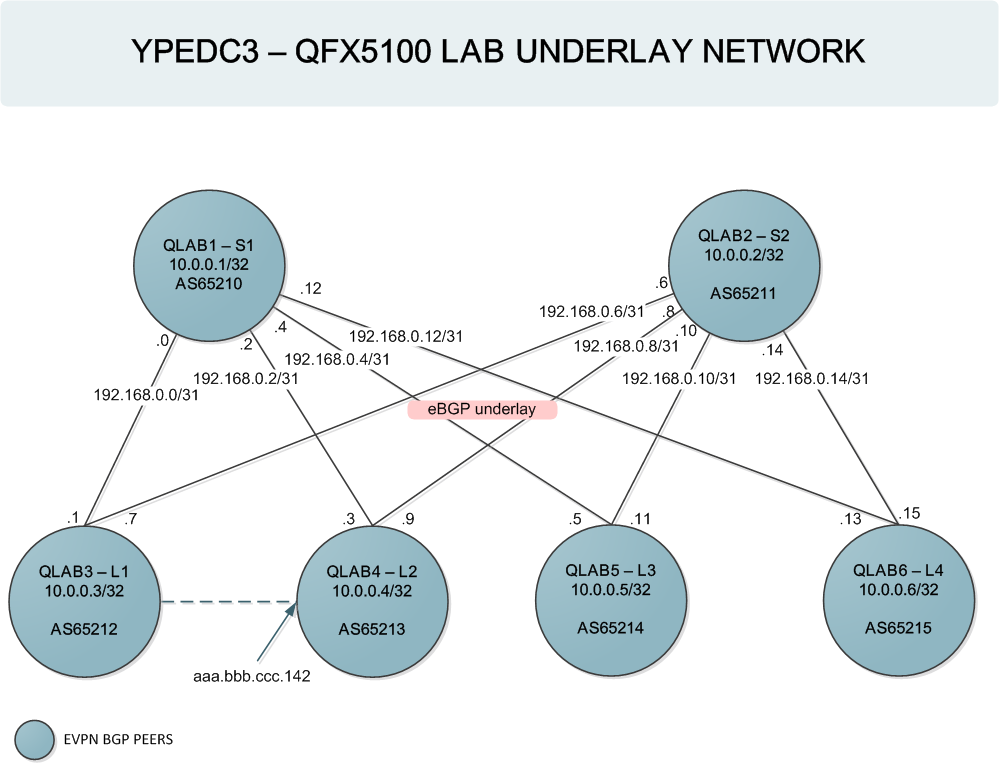

Underlay Network Configuration

We first build the routed Layer-3 underlay network, which provides IP reachability between the VXLAN tunnel endpoints (VTEPs); VTEP peer discovery and end-host reachability are handled afterwards by the EVPN overlay. Since our topology has multiple paths between switches, we need a load-balancing policy:

```
user@lab-3.grnet.gr# show routing-options
forwarding-table {
    export load-balance-per_flow;
}
```

Note that in Junos the load-balance per-packet action in a forwarding-table export policy actually results in per-flow load balancing.

```
user@lab-3.grnet.gr# show policy-options policy-statement load-balance-per_flow
then {
    load-balance per-packet;
}
```
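Per-flow load balancing means that all packets of a given flow are hashed onto the same next hop, so packets within a flow are not reordered, while different flows spread across the equal-cost links. The Python sketch below illustrates the idea with a CRC32 hash over the 5-tuple; the hash fields and function used by the QFX5100 forwarding hardware are different, and pick_next_hop is a hypothetical name. For VXLAN, RFC 7348 recommends deriving the outer UDP source port from a hash of the inner frame, which is what gives the underlay enough entropy to spread tunnel traffic between two VTEPs.

```python
import zlib
from typing import NamedTuple, Sequence


class Flow(NamedTuple):
    src_ip: str
    dst_ip: str
    proto: int
    src_port: int
    dst_port: int


def pick_next_hop(flow: Flow, next_hops: Sequence[str]) -> str:
    """Hash the 5-tuple and map it onto one of the equal-cost next hops."""
    key = f"{flow.src_ip}|{flow.dst_ip}|{flow.proto}|{flow.src_port}|{flow.dst_port}"
    return next_hops[zlib.crc32(key.encode()) % len(next_hops)]


# The two underlay next hops lab-3 installs for 10.0.0.5/32.
NEXT_HOPS = ["192.168.0.0 (et-0/0/48.0)", "192.168.0.6 (et-0/0/49.0)"]

# VXLAN between two VTEP loopbacks: UDP to port 4789, with a source port
# that varies per inner flow (hypothetical values).
flows = [Flow("10.0.0.3", "10.0.0.5", 17, sport, 4789) for sport in range(49152, 49160)]
for f in flows:
    print(f.src_port, pick_next_hop(f, NEXT_HOPS))

# The same flow always maps to the same link.
assert all(pick_next_hop(f, NEXT_HOPS) == pick_next_hop(f, NEXT_HOPS) for f in flows)
```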

There are many ways to implement the underlay network with BGP. In this example each QFX5100 is assigned its own AS number, and eBGP sessions are established over the /31 point-to-point links. Because the equal-cost paths are learned from neighbors in different ASes, multipath is enabled with multipath multiple-as. The import policy accepts everything, while the export policy bgp-underlay-out (not shown) advertises the direct routes that are part of the 10.0.0.0/24 subnet, i.e. the loopbacks.

```
protocols {
    ##
    ## Warning: requires 'bgp' license
    ##
    bgp {
        log-updown;
        graceful-restart;
        group underlay {
            type external;
            mtu-discovery;
            import accept-all;
            export bgp-underlay-out;
            bfd-liveness-detection {
                minimum-interval 350;
                multiplier 3;
                session-mode single-hop;
            }
            multipath multiple-as;
            neighbor 192.168.0.0 {
                description "lab1 - SPINE1";
                peer-as 65110;
                local-as 65112;
            }
            neighbor 192.168.0.6 {
                description "lab2 - SPINE2";
                peer-as 65111;
                local-as 65112;
            }
        }
```

Overlay Network Configuration

The overlay network uses the loopback addresses learned through the underlay fabric in the previous step. The eBGP sessions are configured with EVPN signaling, which enables the corresponding NLRI. Since the sessions run between loopbacks, multihop is configured. The no-nexthop-change statement is not actually needed in our topology, but we left it in place in case we want to experiment further with BGP.

In the lab topology we need a full-mesh BGP overlay, since all devices are effectively leaf switches that participate in the Layer-2 network, delivering the same VLAN ID from YPE2 down to the server ports. In a production network the spine switches do not need to be part of the overlay.

```
group overlay {
    type external;
    multihop {
        ttl 255;
        no-nexthop-change;
    }
    local-address 10.0.0.3;
    family evpn {
        signaling;
    }
    neighbor 10.0.0.4 {
        description leaf-2;
        peer-as 65213;
    }
    neighbor 10.0.0.5 {
        description leaf-3;
        peer-as 65214;
    }
    neighbor 10.0.0.6 {
        description leaf-4;
        peer-as 65215;
    }
    neighbor 10.0.0.1 {
        description spine-1;
        peer-as 65210;
    }
    neighbor 10.0.0.2 {
        description spine-2;
        peer-as 65211;
    }
}
```
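A full mesh of n devices needs n(n-1)/2 BGP sessions, so the overlay session count grows quadratically with the number of switches. A quick check in Python for our six switches (loopbacks 10.0.0.1 to 10.0.0.6) gives 15 sessions, with five overlay neighbors per switch, which matches the five neighbor statements in the group above.

```python
from itertools import combinations

# Overlay loopbacks of lab-1 .. lab-6
LOOPBACKS = [f"10.0.0.{i}" for i in range(1, 7)]

# Each unordered pair of switches peers exactly once.
sessions = list(combinations(LOOPBACKS, 2))
print(len(sessions))  # 15

# Neighbors that lab-3 (10.0.0.3) must configure: every other loopback.
lab3_peers = sorted(
    peer for pair in sessions if "10.0.0.3" in pair for peer in pair if peer != "10.0.0.3"
)
print(lab3_peers)  # ['10.0.0.1', '10.0.0.2', '10.0.0.4', '10.0.0.5', '10.0.0.6']
```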

EVPN is configured with VXLAN as the data-plane encapsulation. For lab purposes, extended-vni-list includes all VNIs, so we don't have to allow each new VNI explicitly. Under vni-options, the route target for each VNI is assigned independently. BUM traffic is also handled by EVPN, using ingress replication.

```
{master:0}[edit]
user@lab-3.grnet.gr# show protocols evpn
vni-options {
    vni 481 {
        vrf-target export target:1:481;
    }
    vni 600 {
        vrf-target export target:1:600;
    }
}
encapsulation vxlan;
extended-vni-list all;
multicast-mode ingress-replication;
```
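With ingress replication, the ingress VTEP itself copies each BUM frame and sends one unicast VXLAN packet to every remote VTEP that has advertised interest in the VNI. It learns those VTEPs from EVPN Type 3 (inclusive multicast) routes, such as the 3:10.0.0.5:1::481::10.0.0.5/304 routes that lab-3 receives from spine-1 later in this post. The Python sketch below builds such flood lists from (VNI, originating VTEP) pairs; the data structures and the names build_flood_lists and replicate are our own illustration, not Junos internals.

```python
from collections import defaultdict

# (VNI, originating VTEP) pairs taken from the Type 3 routes lab-3 receives.
TYPE3_ROUTES = [
    (481, "10.0.0.1"), (481, "10.0.0.4"), (481, "10.0.0.5"), (481, "10.0.0.6"),
    (600, "10.0.0.4"), (600, "10.0.0.5"), (600, "10.0.0.6"),
]


def build_flood_lists(routes, local_vtep):
    """Map each VNI to the remote VTEPs that need a copy of BUM traffic."""
    flood = defaultdict(set)
    for vni, vtep in routes:
        if vtep != local_vtep:  # never replicate back to ourselves
            flood[vni].add(vtep)
    return {vni: sorted(vteps) for vni, vteps in flood.items()}


def replicate(frame: bytes, vni: int, flood_lists) -> list:
    """Return one (destination VTEP, frame) copy per remote VTEP in the VNI."""
    return [(vtep, frame) for vtep in flood_lists.get(vni, [])]


lists = build_flood_lists(TYPE3_ROUTES, local_vtep="10.0.0.3")
print(lists[481])                                # ['10.0.0.1', '10.0.0.4', '10.0.0.5', '10.0.0.6']
print(len(replicate(b"broadcast", 600, lists)))  # 3
```

The trade-off is that the ingress VTEP sends N copies over its uplinks instead of relying on multicast in the underlay, which is acceptable at lab scale.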

Next, we move on to the switch-options configuration. The loopback is defined as the source interface for our VTEPs. We also define the route distinguisher that is included in the EVPN NLRIs sent over BGP. The vrf-import policy accepts EVPN routes that carry specific extended communities advertised by our eBGP peers, with a separate community for each VXLAN. The vrf-target statement is not actually needed in our lab; in principle, it is the community with which the switch sends its Ethernet segment (Type 1) routes.

```
{master:0}[edit]
user@lab-3.grnet.gr# show switch-options
vtep-source-interface lo0.0;
route-distinguisher 10.0.0.3:1;
vrf-import underlay-import;
vrf-target {
    target:7777:7777;
    auto;
}
```

```
{master:0}[edit]
user@lab-3.grnet.gr# show policy-options policy-statement underlay-import
term VXLAN600 {
    from community VXLAN600;
    then accept;
}
term VXLAN481 {
    from community VXLAN481;
    then accept;
}
term reject {
    then reject;
}
```
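The underlay-import policy is evaluated term by term, and the first term whose from condition matches decides the action; a route that matches neither VXLAN term falls through to the final reject term. The Python sketch below models that first-match logic. The definitions of the communities VXLAN481 and VXLAN600 are not shown in this post; we assume here that each matches the route target exported for its VNI under vni-options (target:1:481 and target:1:600).

```python
# Assumed community definitions (not shown in the post).
COMMUNITIES = {
    "VXLAN600": {"target:1:600"},
    "VXLAN481": {"target:1:481"},
}

# underlay-import as (term name, community to match or None, action)
UNDERLAY_IMPORT = [
    ("VXLAN600", "VXLAN600", "accept"),
    ("VXLAN481", "VXLAN481", "accept"),
    ("reject", None, "reject"),
]


def evaluate(route_communities: set, policy=UNDERLAY_IMPORT) -> str:
    """Return the action of the first term that matches the route."""
    for _name, community, action in policy:
        # A term without a from clause matches everything; otherwise the
        # route must carry every member of the named community.
        if community is None or COMMUNITIES[community] <= route_communities:
            return action
    return "reject"  # not reached here: the last term always matches


print(evaluate({"target:1:481"}))      # accept
print(evaluate({"target:7777:7777"}))  # reject
```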

VXLAN configuration

Things are fairly simple here: the VXLAN configuration goes under the VLAN stanza, and BUM traffic handling is given to the overlay by configuring ingress-node-replication instead of relying on multicast in the underlay.

```
{master:0}[edit]
user@lab-3.grnet.gr# show vlans
PROVISIONING {
    vlan-id 481;
    ##
    ## Warning: requires 'vcf' license
    ##
    vxlan {
        vni 481;
        ingress-node-replication;
    }
}
SERVER_PRIVATE_LAN1 {
    vlan-id 600;
    ##
    ## Warning: requires 'vcf' license
    ##
    vxlan {
        vni 600;
        ingress-node-replication;
    }
}
```

After configuring a new VXLAN, we also have to assign it an extended community to be included in the EVPN advertisements (under vni-options) and add a matching term to the underlay-import policy shown earlier.


Interface Configuration

Access Port

This is a typical Juniper configuration:

```
{master:0}[edit]
user@lab-3.grnet.gr# show interfaces xe-0/0/1
description VMC-04-01-RED;
ether-options {
    auto-negotiation;
}
unit 0 {
    family ethernet-switching {
        interface-mode access;
        vlan {
            members PROVISIONING;
        }
    }
}
```

Trunk port

Trunk port configuration works as expected. We configured a number of VLANs and issued some ICMP traffic from server 05-01 to server 07-01.

In all cases, MAC address learning in the Ethernet switching table worked, and EVPN NLRIs were exchanged between all lab switches (lab-1 to lab-6).

```
{master:0}[edit]
user@lab-4.grnet.gr# show groups VIMA-LAB
interfaces {
    <*> {
        mtu 9022;
        ether-options {
            auto-negotiation;
        }
        unit 0 {
            family ethernet-switching {
                interface-mode trunk;
                vlan {
                    members [ PROVISIONING SERVER_PRIVATE_LAN1 vm-vlan-101 vm-vlan-102 vm-vlan-103 vm-vlan-104 vm-vlan-105 ];
                }
            }
        }
    }
}

{master:0}[edit]
user@lab-4.grnet.gr# show interfaces xe-0/0/1
apply-groups VIMA-LAB;
description VMC-05-01-RED;
```

{master:0}[edit]

user@lab-4.grnet.gr# run show ethernet-switching interface xe-0/0/1

Routing Instance Name : default-switch

Logical Interface flags (DL - disable learning, AD - packet action drop,

LH - MAC limit hit, DN - interface down,

SCTL - shutdown by Storm-control,

MMAS - Mac-move action shutdown, AS - Autostate-exclude enabled)

Logical Vlan TAG MAC STP Logical Tagging

interface members limit state interface flags

xe-0/0/1.0 294912 tagged

PROVISIONING 481 294912 Forwarding tagged

SERVER_PRIVATE_LAN1 600 294912 Forwarding tagged

vm-vlan-101 101 294912 Forwarding tagged

vm-vlan-102 102 294912 Forwarding tagged

vm-vlan-103 103 294912 Forwarding tagged

vm-vlan-104 104 294912 Forwarding tagged

vm-vlan-105 105 294912 Forwarding tagged

Trunk port with Native VLAN

Native VLAN configuration follows the Junos ELS style: the native VLAN ID is set on the physical interface, and the VLAN name is added to the ethernet-switching family of the logical interface.

```
{master:0}[edit]
user@lab-3.grnet.gr# show groups VIMA-LAB
interfaces {
    <*> {
        native-vlan-id 481;
        mtu 9022;
        ether-options {
            auto-negotiation;
        }
        unit 0 {
            family ethernet-switching {
                interface-mode trunk;
                vlan {
                    members [ PROVISIONING SERVER_PRIVATE_LAN1 vm-vlan-101 vm-vlan-102 vm-vlan-103 vm-vlan-104 vm-vlan-105 ];
                }
            }
        }
    }
}
```

Unfortunately, our tcpdump captures showed that traffic leaving the interface was tagged with VLAN ID 481, whereas it should have been untagged.

This does not happen when the interface carries regular VLANs (without VXLAN encapsulation). The ethernet-switching configuration of the interface is exactly the same as in the VXLAN case; the only difference is that the allowed VLANs are not mapped to VNIs in the VLAN configuration.

Aggregate Interface

In this scenario a BME is multihomed to two QFX5100 devices. Both QFX5100s must be able to send and receive traffic from the server, so both server links must be used. On the server side LACP is set up as usual, so nothing is different so far. On the network side, the active/active topology is handled by EVPN: both QFX5100s (PE routers in our case) are configured with the same Ethernet Segment Identifier (ESI) and with all-active mode.

Leaf-1 configuration with ESI that is globally unique across the EVPN domain:

```
user@lab-3.grnet.gr# show interfaces ae501
description VMC-05-01-AGR;
esi {
    00:01:01:01:01:01:01:01:05:01;
    all-active;
}
aggregated-ether-options {
    lacp {
        active;
        system-id 00:00:00:01:05:01;
    }
}
unit 0 {
    family ethernet-switching {
        interface-mode trunk;
        vlan {
            members PROVISIONING;
        }
    }
}
```

Leaf-2 configuration, with the same ESI as leaf-1 (unique across the EVPN domain):

```
user@lab-4.grnet.gr# show interfaces ae501
description VMC-05-01-AGR;
esi {
    00:01:01:01:01:01:01:01:05:01;
    all-active;
}
aggregated-ether-options {
    lacp {
        active;
        system-id 00:00:00:01:05:01;
    }
}
unit 0 {
    family ethernet-switching {
        interface-mode trunk;
        vlan {
            members PROVISIONING;
        }
    }
}
```
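An ESI is a 10-octet value whose first octet is the ESI type; type 0x00, used here, means the remaining nine octets are configured directly by the operator (RFC 7432, section 5), and the all-zero (single-homed) and all-ones values are reserved. Because all-active multihoming only works if both leaves advertise exactly the same ESI, a pre-deployment check along the lines of the Python sketch below can catch typos. The helper names parse_esi and check_multihoming are hypothetical. Incidentally, the nine-octet value printed below is the same string that appears in the Type 1 and Type 4 route prefixes shown later.

```python
def parse_esi(text: str) -> tuple:
    """Split a colon-separated ESI into (type octet, 9-octet value)."""
    octets = bytes(int(part, 16) for part in text.split(":"))
    if len(octets) != 10:
        raise ValueError(f"ESI must be 10 octets, got {len(octets)}")
    if octets == bytes(10) or octets == b"\xff" * 10:
        raise ValueError("all-zero and all-ones ESIs are reserved")
    return octets[0], octets[1:]


def check_multihoming(esi_by_leaf: dict) -> bool:
    """True if every leaf attached to the segment uses the same ESI."""
    return len({parse_esi(esi) for esi in esi_by_leaf.values()}) == 1


ae501 = {
    "lab-3": "00:01:01:01:01:01:01:01:05:01",
    "lab-4": "00:01:01:01:01:01:01:01:05:01",
}
esi_type, value = parse_esi(ae501["lab-3"])
print(esi_type, value.hex())     # 0 010101010101010501
print(check_multihoming(ae501))  # True
```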

Verify Aggregate Interfaces

Each leaf device has exchanged LACP packets with the server and set up its aggregate interface:

{master:0}[edit]

user@lab-3.grnet.gr# run show lacp interfaces

Aggregated interface: ae501

LACP state: Role Exp Def Dist Col Syn Aggr Timeout Activity

xe-0/0/25 Actor No No Yes Yes Yes Yes Fast Active

xe-0/0/25 Partner No No Yes Yes Yes Yes Slow Active

LACP protocol: Receive State Transmit State Mux State

xe-0/0/25 Current Slow periodic Collecting distributing

{master:0}[edit]

user@lab-3.grnet.gr# run show ethernet-switching table

MAC flags (S - static MAC, D - dynamic MAC, L - locally learned, P - Persistent static

SE - statistics enabled, NM - non configured MAC, R - remote PE MAC, O - ovsdb MAC)

Ethernet switching table : 8 entries, 8 learned

Routing instance : default-switch

Vlan MAC MAC Logical Active

name address flags interface source

PROVISIONING 00:00:0c:9f:f0:01 D vtep.32771 10.0.0.1

PROVISIONING 00:05:73:a0:00:01 D vtep.32771 10.0.0.1

PROVISIONING 64:a0:e7:42:ca:c1 D vtep.32771 10.0.0.1

PROVISIONING 64:a0:e7:42:dc:c1 D vtep.32771 10.0.0.1

PROVISIONING ec:f4:bb:ed:46:98 D vtep.32770 10.0.0.5

PROVISIONING ec:f4:bb:ed:4f:e8 D vtep.32772 10.0.0.6

PROVISIONING ec:f4:bb:ed:53:c8 DR ae501.0

PROVISIONING ec:f4:bb:ed:b7:e8 D vtep.32770 10.0.0.5

{master:0}[edit]

user@lab-4.grnet.gr# run show lacp interfaces

Aggregated interface: ae501

LACP state: Role Exp Def Dist Col Syn Aggr Timeout Activity

xe-0/0/1 Actor No No Yes Yes Yes Yes Fast Active

xe-0/0/1 Partner No No Yes Yes Yes Yes Slow Active

LACP protocol: Receive State Transmit State Mux State

xe-0/0/1 Current Slow periodic Collecting distributing

{master:0}[edit]

user@lab-4.grnet.gr# run show ethernet-switching table

MAC flags (S - static MAC, D - dynamic MAC, L - locally learned, P - Persistent static

SE - statistics enabled, NM - non configured MAC, R - remote PE MAC, O - ovsdb MAC)

Ethernet switching table : 8 entries, 8 learned

Routing instance : default-switch

Vlan MAC MAC Logical Active

name address flags interface source

PROVISIONING 00:00:0c:9f:f0:01 D vtep.32771 10.0.0.1

PROVISIONING 00:05:73:a0:00:01 D vtep.32771 10.0.0.1

PROVISIONING 64:a0:e7:42:ca:c1 D vtep.32771 10.0.0.1

PROVISIONING 64:a0:e7:42:dc:c1 D vtep.32771 10.0.0.1

PROVISIONING ec:f4:bb:ed:46:98 D vtep.32770 10.0.0.5

PROVISIONING ec:f4:bb:ed:4f:e8 D vtep.32772 10.0.0.6

PROVISIONING ec:f4:bb:ed:53:c8 DL ae501.0

PROVISIONING ec:f4:bb:ed:b7:e8 D vtep.32770 10.0.0.5

Verify Overlay Network

The EVPN Type 1 (Ethernet auto-discovery) routes for the new Ethernet segment are sent with the vrf-target community target:7777:7777, while the MAC address itself is carried in a Type 2 route:

```
{master:0}[edit]
user@lab-3.grnet.gr# show switch-options
vtep-source-interface lo0.0;
route-distinguisher 10.0.0.3:1;
vrf-import underlay-import;
vrf-target {
    target:7777:7777;
    auto;
}
```

As a result, the address is learned by the spine switches through the overlay EVPN signaling:

{master:0}[edit]

user@lab-1.grnet.gr# run show route receive-protocol bgp 10.0.0.4

inet.0: 24 destinations, 31 routes (24 active, 0 holddown, 0 hidden)

inet.2: 1 destinations, 1 routes (1 active, 0 holddown, 0 hidden)

:vxlan.inet.0: 21 destinations, 21 routes (21 active, 0 holddown, 0 hidden)

inet6.0: 1 destinations, 1 routes (1 active, 0 holddown, 0 hidden)

bgp.evpn.0: 43 destinations, 195 routes (43 active, 0 holddown, 0 hidden)

Prefix Nexthop MED Lclpref AS path

1:10.0.0.3:0::010101010101010501::FFFF:FFFF/304

10.0.0.3 65213 65212 I

1:10.0.0.3:1::010101010101010501::0/304

10.0.0.3 65213 65212 I

1:10.0.0.4:0::010101010101010501::FFFF:FFFF/304

* 10.0.0.4 65213 I

1:10.0.0.4:1::010101010101010501::0/304

* 10.0.0.4 65213 I

2:10.0.0.4:1::481::ec:f4:bb:ed:53:c8/304

* 10.0.0.4 65213 I

2:10.0.0.5:1::481::ec:f4:bb:ed:46:98/304

10.0.0.5 65213 65214 I

2:10.0.0.5:1::481::ec:f4:bb:ed:b7:e8/304

10.0.0.5 65213 65214 I

2:10.0.0.6:1::481::ec:f4:bb:ed:4f:e8/304

10.0.0.6 65213 65215 I

3:10.0.0.3:1::101::10.0.0.3/304

10.0.0.3 65213 65212 I

3:10.0.0.3:1::102::10.0.0.3/304

10.0.0.3 65213 65212 I

3:10.0.0.3:1::103::10.0.0.3/304

10.0.0.3 65213 65212 I

3:10.0.0.3:1::104::10.0.0.3/304

10.0.0.3 65213 65212 I

3:10.0.0.3:1::105::10.0.0.3/304

10.0.0.3 65213 65212 I

3:10.0.0.3:1::481::10.0.0.3/304

10.0.0.3 65213 65212 I

3:10.0.0.3:1::600::10.0.0.3/304

10.0.0.3 65213 65212 I

3:10.0.0.4:1::101::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.4:1::102::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.4:1::103::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.4:1::104::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.4:1::105::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.4:1::481::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.4:1::600::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.5:1::101::10.0.0.5/304

10.0.0.5 65213 65214 I

3:10.0.0.5:1::102::10.0.0.5/304

10.0.0.5 65213 65214 I

3:10.0.0.5:1::103::10.0.0.5/304

10.0.0.5 65213 65214 I

3:10.0.0.5:1::104::10.0.0.5/304

10.0.0.5 65213 65214 I

3:10.0.0.5:1::105::10.0.0.5/304

10.0.0.5 65213 65214 I

3:10.0.0.5:1::481::10.0.0.5/304

10.0.0.5 65213 65214 I

3:10.0.0.5:1::600::10.0.0.5/304

10.0.0.5 65213 65214 I

3:10.0.0.6:1::101::10.0.0.6/304

10.0.0.6 65213 65215 I

3:10.0.0.6:1::102::10.0.0.6/304

10.0.0.6 65213 65215 I

3:10.0.0.6:1::103::10.0.0.6/304

10.0.0.6 65213 65215 I

3:10.0.0.6:1::104::10.0.0.6/304

10.0.0.6 65213 65215 I

3:10.0.0.6:1::105::10.0.0.6/304

10.0.0.6 65213 65215 I

3:10.0.0.6:1::481::10.0.0.6/304

10.0.0.6 65213 65215 I

3:10.0.0.6:1::600::10.0.0.6/304

10.0.0.6 65213 65215 I

4:10.0.0.3:0::010101010101010501:10.0.0.3/304

10.0.0.3 65213 65212 I

4:10.0.0.4:0::010101010101010501:10.0.0.4/304

* 10.0.0.4 65213 I

default-switch.evpn.0: 41 destinations, 185 routes (41 active, 0 holddown, 0 hidden)

Prefix Nexthop MED Lclpref AS path

1:10.0.0.3:0::010101010101010501::FFFF:FFFF/304

10.0.0.3 65213 65212 I

1:10.0.0.3:1::010101010101010501::0/304

10.0.0.3 65213 65212 I

1:10.0.0.4:0::010101010101010501::FFFF:FFFF/304

* 10.0.0.4 65213 I

1:10.0.0.4:1::010101010101010501::0/304

* 10.0.0.4 65213 I

2:10.0.0.4:1::481::ec:f4:bb:ed:53:c8/304

* 10.0.0.4 65213 I

2:10.0.0.5:1::481::ec:f4:bb:ed:46:98/304

10.0.0.5 65213 65214 I

2:10.0.0.5:1::481::ec:f4:bb:ed:b7:e8/304

10.0.0.5 65213 65214 I

2:10.0.0.6:1::481::ec:f4:bb:ed:4f:e8/304

10.0.0.6 65213 65215 I

3:10.0.0.3:1::101::10.0.0.3/304

10.0.0.3 65213 65212 I

3:10.0.0.3:1::102::10.0.0.3/304

10.0.0.3 65213 65212 I

3:10.0.0.3:1::103::10.0.0.3/304

10.0.0.3 65213 65212 I

3:10.0.0.3:1::104::10.0.0.3/304

10.0.0.3 65213 65212 I

3:10.0.0.3:1::105::10.0.0.3/304

10.0.0.3 65213 65212 I

3:10.0.0.3:1::481::10.0.0.3/304

10.0.0.3 65213 65212 I

3:10.0.0.3:1::600::10.0.0.3/304

10.0.0.3 65213 65212 I

3:10.0.0.4:1::101::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.4:1::102::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.4:1::103::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.4:1::104::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.4:1::105::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.4:1::481::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.4:1::600::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.5:1::101::10.0.0.5/304

10.0.0.5 65213 65214 I

3:10.0.0.5:1::102::10.0.0.5/304

10.0.0.5 65213 65214 I

3:10.0.0.5:1::103::10.0.0.5/304

10.0.0.5 65213 65214 I

3:10.0.0.5:1::104::10.0.0.5/304

10.0.0.5 65213 65214 I

3:10.0.0.5:1::105::10.0.0.5/304

10.0.0.5 65213 65214 I

3:10.0.0.5:1::481::10.0.0.5/304

10.0.0.5 65213 65214 I

3:10.0.0.5:1::600::10.0.0.5/304

10.0.0.5 65213 65214 I

3:10.0.0.6:1::101::10.0.0.6/304

10.0.0.6 65213 65215 I

3:10.0.0.6:1::102::10.0.0.6/304

10.0.0.6 65213 65215 I

3:10.0.0.6:1::103::10.0.0.6/304

10.0.0.6 65213 65215 I

3:10.0.0.6:1::104::10.0.0.6/304

10.0.0.6 65213 65215 I

3:10.0.0.6:1::105::10.0.0.6/304

10.0.0.6 65213 65215 I

3:10.0.0.6:1::481::10.0.0.6/304

10.0.0.6 65213 65215 I

3:10.0.0.6:1::600::10.0.0.6/304

10.0.0.6 65213 65215 I
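The prefixes in this output follow a fixed pattern: route type, route distinguisher, then type-specific fields separated by "::". The Python sketch below splits the Type 2 (MAC advertisement) and Type 3 (inclusive multicast) prefixes seen above into their fields, which is handy when post-processing saved CLI output. The field layout is inferred from this output alone rather than from Junos documentation, so treat it as an assumption; Type 1 and Type 4 prefixes are deliberately rejected.

```python
from typing import NamedTuple


class EvpnRoute(NamedTuple):
    route_type: int
    rd: str     # route distinguisher, e.g. "10.0.0.4:1"
    vni: int    # number printed after the RD; equals the VNI in this output
    value: str  # MAC address (Type 2) or originating VTEP (Type 3)


def parse_prefix(prefix: str) -> EvpnRoute:
    """Parse a Type 2 or Type 3 prefix as printed in bgp.evpn.0."""
    body = prefix.split("/")[0]    # drop the "/304" length
    parts = body.split("::")       # MAC addresses only use single colons
    route_type, rd = parts[0].split(":", 1)
    if route_type not in ("2", "3") or len(parts) != 3:
        raise ValueError(f"unsupported prefix: {prefix}")
    return EvpnRoute(int(route_type), rd, int(parts[1]), parts[2])


r2 = parse_prefix("2:10.0.0.4:1::481::ec:f4:bb:ed:53:c8/304")
r3 = parse_prefix("3:10.0.0.5:1::600::10.0.0.5/304")
print(r2)                # EvpnRoute(route_type=2, rd='10.0.0.4:1', vni=481, value='ec:f4:bb:ed:53:c8')
print(r3.value, r3.vni)  # 10.0.0.5 600
```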

Verify Ethernet Switching Table

The spine switches then add the new entry to their Ethernet switching tables:

{master:0}[edit]

user@lab-1.grnet.gr# run show ethernet-switching table

MAC flags (S - static MAC, D - dynamic MAC, L - locally learned, P - Persistent static

SE - statistics enabled, NM - non configured MAC, R - remote PE MAC, O - ovsdb MAC)

Ethernet switching table : 9 entries, 9 learned

Routing instance : default-switch

Vlan MAC MAC Logical Active

name address flags interface source

PROVISIONING 00:00:0c:9f:f0:01 D xe-0/0/0.0

PROVISIONING 00:05:73:a0:00:01 D xe-0/0/0.0

PROVISIONING 0c:86:10:5a:80:7b D vtep.32768 10.0.0.3

PROVISIONING 64:a0:e7:42:ca:c1 D xe-0/0/0.0

PROVISIONING 64:a0:e7:42:dc:c1 D xe-0/0/0.0

PROVISIONING ec:f4:bb:ed:46:98 D vtep.32773 10.0.0.5

PROVISIONING ec:f4:bb:ed:4f:e8 D vtep.32776 10.0.0.6

PROVISIONING ec:f4:bb:ed:53:c8 DR esi.1786 00:01:01:01:01:01:01:01:05:01

PROVISIONING ec:f4:bb:ed:b7:e8 D vtep.32773 10.0.0.5

Verify DF/BDF status

Leaf-2 is also the designated forwarder for this ESI, while Leaf-1 is the backup:

{master:0}[edit]

user@lab-4.grnet.gr# show protocols evpn | match forward

designated-forwarder-election-hold-time 2;

{master:0}[edit]

user@lab-4.grnet.gr# run show evpn instance esi 00:01:01:01:01:01:01:01:05:01 backup-forwarder

Instance: default-switch

Number of ethernet segments: 1

ESI: 00:01:01:01:01:01:01:01:05:01

Backup forwarder: 10.0.0.3

{master:0}[edit]

user@lab-4.grnet.gr# run show evpn instance esi 00:01:01:01:01:01:01:01:05:01 designated-forwarder

Instance: default-switch

Number of ethernet segments: 1

ESI: 00:01:01:01:01:01:01:01:05:01

Designated forwarder: 10.0.0.4
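RFC 7432 (section 8.5) defines a default designated-forwarder election: the PEs attached to an Ethernet segment (learned from Type 4 routes) are sorted by IP address in increasing numeric order, and for VLAN V the PE at index V mod N becomes the DF, where N is the number of PEs. Assuming Junos applies this default procedure to VLAN 481 on our segment, the Python sketch below reproduces the result above: with lab-3 (10.0.0.3) and lab-4 (10.0.0.4), 481 mod 2 = 1 selects 10.0.0.4. We have not verified which Ethernet tag Junos feeds into the election, so treat the match as illustrative; elect_df is a hypothetical helper.

```python
import ipaddress


def elect_df(pe_addresses, vlan: int) -> str:
    """Default RFC 7432 service carving: index = VLAN mod N over sorted PEs."""
    # Sort numerically, not as strings ("10.0.0.10" must sort after "10.0.0.3").
    ordered = sorted(pe_addresses, key=ipaddress.ip_address)
    return ordered[vlan % len(ordered)]


# PEs that advertised a Type 4 route for ESI 00:01:01:01:01:01:01:01:05:01
segment_pes = ["10.0.0.4", "10.0.0.3"]

df = elect_df(segment_pes, vlan=481)
backups = [pe for pe in segment_pes if pe != df]
print(df, backups)  # 10.0.0.4 ['10.0.0.3']
```

Because the index depends on the VLAN, a segment carrying many VLANs spreads the DF role across both leaves.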

Verification and Tests

Underlay

We first confirm that all loopbacks are reachable through the underlay network:

{master:0}[edit]

user@lab-3.grnet.gr# run op bgp-show-summary

Peer-address Description Peer-AS Flaps Is UP for Current State

10.0.0.1 spine-1 65210 2 1:10:28 Established

10.0.0.2 spine-2 65211 0 1:06:31 Established

10.0.0.4 leaf-2 65213 1 1:09:36 Established

10.0.0.5 leaf-3 65214 0 1:05:01 Established

10.0.0.6 leaf-4 65215 0 1:04:26 Established

192.168.0.0 lab1 - SPINE1 65110 0 1:11:45 Established

192.168.0.6 lab2 - SPINE2 65111 0 1:06:34 Established

{master:0}[edit]

user@lab-3.grnet.gr# run show route table inet.0 | match 10.0.0

10.0.0.1/32 *[BGP/170] 01:11:55, localpref 100

10.0.0.2/32 *[BGP/170] 01:06:44, localpref 100

10.0.0.3/32 *[Direct/0] 01:56:25

10.0.0.4/32 *[BGP/170] 01:09:49, localpref 100

10.0.0.5/32 *[BGP/170] 01:05:21, localpref 100, from 192.168.0.6

10.0.0.6/32 *[BGP/170] 01:04:44, localpref 100, from 192.168.0.0

And multipath is in effect:

{master:0}[edit]

user@lab-3.grnet.gr# run show route 10.0.0.5/32

inet.0: 24 destinations, 36 routes (24 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

10.0.0.5/32 *[BGP/170] 01:05:43, localpref 100, from 192.168.0.6

AS path: 65111 65114 I, validation-state: unverified

> to 192.168.0.0 via et-0/0/48.0

to 192.168.0.6 via et-0/0/49.0

[BGP/170] 01:05:41, localpref 100

AS path: 65110 65114 I, validation-state: unverified

> to 192.168.0.0 via et-0/0/48.0

{master:0}[edit]

user@lab-3.grnet.gr# run show route forwarding-table destination 10.0.0.5

Routing table: default.inet

Internet:

Destination Type RtRef Next hop Type Index NhRef Netif

10.0.0.5/32 user 1 ulst 131073 7

192.168.0.0 ucst 1794 14 et-0/0/48.0

192.168.0.6 ucst 1809 12 et-0/0/49.0
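The two BGP paths for 10.0.0.5/32 above have the same AS-path length but arrive from different neighboring ASes (65111 via spine-2 and 65110 via spine-1). In Junos, multipath by default only combines paths from the same neighboring AS, and multiple-as disables that check, which is why both next hops end up in the forwarding table. The simplified Python sketch below shows the effect; it ignores the other BGP tie-breakers (local preference, origin, MED and so on), and the Path type and multipath_set function are our own.

```python
from typing import List, NamedTuple


class Path(NamedTuple):
    next_hop: str
    as_path: List[int]


def multipath_set(paths: List[Path], multiple_as: bool) -> List[Path]:
    """Simplified eBGP multipath: keep paths tied with the best on AS-path length."""
    best = min(paths, key=lambda p: len(p.as_path))
    tied = [p for p in paths if len(p.as_path) == len(best.as_path)]
    if not multiple_as:
        # Without multiple-as, only paths from the same neighboring AS qualify.
        tied = [p for p in tied if p.as_path[0] == best.as_path[0]]
    return tied


# Paths for 10.0.0.5/32 as seen on lab-3
paths = [
    Path("192.168.0.6", [65111, 65114]),
    Path("192.168.0.0", [65110, 65114]),
]
print([p.next_hop for p in multipath_set(paths, multiple_as=True)])   # ['192.168.0.6', '192.168.0.0']
print([p.next_hop for p in multipath_set(paths, multiple_as=False)])  # ['192.168.0.6']
```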

Overlay

EVPN Type 2 routes advertised over BGP carry the MAC addresses each device has in its Ethernet switching table, while Type 3 (inclusive multicast) routes announce each VTEP's membership in a VNI:

{master:0}[edit]

user@lab-3.grnet.gr# run show route advertising-protocol bgp 10.0.0.1

bgp.evpn.0: 14 destinations, 54 routes (14 active, 0 holddown, 0 hidden)

Prefix Nexthop MED Lclpref AS path

2:10.0.0.3:1::481::0c:86:10:5a:80:7b/304

* Self I

2:10.0.0.3:1::481::ec:f4:bb:ed:9b:18/304

* Self I

2:10.0.0.4:1::481::ec:f4:bb:ed:53:c8/304

* 10.0.0.4 65213 I

3:10.0.0.3:1::481::10.0.0.3/304

* Self I

3:10.0.0.4:1::481::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.4:1::600::10.0.0.4/304

* 10.0.0.4 65213 I

3:10.0.0.5:1::481::10.0.0.5/304

* 10.0.0.5 65214 I

3:10.0.0.5:1::600::10.0.0.5/304

* 10.0.0.5 65214 I

3:10.0.0.6:1::481::10.0.0.6/304

* 10.0.0.6 65215 I

3:10.0.0.6:1::600::10.0.0.6/304

* 10.0.0.6 65215 I

default-switch.evpn.0: 14 destinations, 54 routes (14 active, 0 holddown, 0 hidden)

Prefix Nexthop MED Lclpref AS path

2:10.0.0.3:1::481::0c:86:10:5a:80:7b/304

* Self I

2:10.0.0.3:1::481::ec:f4:bb:ed:9b:18/304

* Self I

3:10.0.0.3:1::481::10.0.0.3/304

* Self I

{master:0}[edit]

user@lab-3.grnet.gr# run show route receive-protocol bgp 10.0.0.1

inet.0: 24 destinations, 36 routes (24 active, 0 holddown, 0 hidden)

inet.1: 3 destinations, 3 routes (3 active, 0 holddown, 0 hidden)

:vxlan.inet.0: 12 destinations, 12 routes (12 active, 0 holddown, 0 hidden)

inet6.0: 2 destinations, 2 routes (2 active, 0 holddown, 0 hidden)

inet6.1: 1 destinations, 1 routes (1 active, 0 holddown, 0 hidden)

bgp.evpn.0: 14 destinations, 54 routes (14 active, 0 holddown, 0 hidden)

Prefix Nexthop MED Lclpref AS path

2:10.0.0.1:1::481::00:00:0c:07:ac:01/304

* 10.0.0.1 65210 I

2:10.0.0.1:1::481::64:a0:e7:42:ca:c1/304

* 10.0.0.1 65210 I

2:10.0.0.1:1::481::64:a0:e7:42:dc:c1/304

* 10.0.0.1 65210 I

2:10.0.0.4:1::481::ec:f4:bb:ed:53:c8/304

10.0.0.4 65210 65213 I

3:10.0.0.1:1::481::10.0.0.1/304

* 10.0.0.1 65210 I

3:10.0.0.4:1::481::10.0.0.4/304

10.0.0.4 65210 65213 I

3:10.0.0.4:1::600::10.0.0.4/304

10.0.0.4 65210 65213 I

3:10.0.0.5:1::481::10.0.0.5/304

10.0.0.5 65210 65214 I

3:10.0.0.5:1::600::10.0.0.5/304

10.0.0.5 65210 65214 I

3:10.0.0.6:1::481::10.0.0.6/304

10.0.0.6 65210 65215 I

3:10.0.0.6:1::600::10.0.0.6/304

10.0.0.6 65210 65215 I

default-switch.evpn.0: 14 destinations, 54 routes (14 active, 0 holddown, 0 hidden)

Prefix Nexthop MED Lclpref AS path

2:10.0.0.1:1::481::00:00:0c:07:ac:01/304

* 10.0.0.1 65210 I

2:10.0.0.1:1::481::64:a0:e7:42:ca:c1/304

* 10.0.0.1 65210 I

2:10.0.0.1:1::481::64:a0:e7:42:dc:c1/304

* 10.0.0.1 65210 I

2:10.0.0.4:1::481::ec:f4:bb:ed:53:c8/304

10.0.0.4 65210 65213 I

3:10.0.0.1:1::481::10.0.0.1/304

* 10.0.0.1 65210 I

3:10.0.0.4:1::481::10.0.0.4/304

10.0.0.4 65210 65213 I

3:10.0.0.4:1::600::10.0.0.4/304

10.0.0.4 65210 65213 I

3:10.0.0.5:1::481::10.0.0.5/304

10.0.0.5 65210 65214 I

3:10.0.0.5:1::600::10.0.0.5/304

10.0.0.5 65210 65214 I

3:10.0.0.6:1::481::10.0.0.6/304

10.0.0.6 65210 65215 I

3:10.0.0.6:1::600::10.0.0.6/304

10.0.0.6 65210 65215 I

Switching Table

{master:0}[edit]

user@lab-3.grnet.gr# run show ethernet-switching table

MAC flags (S - static MAC, D - dynamic MAC, L - locally learned, P - Persistent static

SE - statistics enabled, NM - non configured MAC, R - remote PE MAC, O - ovsdb MAC)

Ethernet switching table : 6 entries, 6 learned

Routing instance : default-switch

Vlan MAC MAC Logical Active

name address flags interface source

PROVISIONING 00:00:0c:07:ac:01 D vtep.32769 10.0.0.1

PROVISIONING 0c:86:10:5a:80:7b D et-0/0/50.0

PROVISIONING 64:a0:e7:42:ca:c1 D vtep.32769 10.0.0.1

PROVISIONING 64:a0:e7:42:dc:c1 D vtep.32769 10.0.0.1

PROVISIONING ec:f4:bb:ed:53:c8 D vtep.32770 10.0.0.4

PROVISIONING ec:f4:bb:ed:9b:18 D xe-0/0/1.0

These entries are also present in the EVPN database:

{master:0}[edit]

user@lab-3.grnet.gr# run show evpn database

Instance: default-switch

VLAN VNI MAC address Active source Timestamp IP address

481 00:00:0c:07:ac:01 10.0.0.1 Aug 26 11:14:22

481 0c:86:10:5a:80:7b et-0/0/50.0 Aug 26 10:40:51

481 64:a0:e7:42:ca:c1 10.0.0.1 Aug 26 11:14:22

481 64:a0:e7:42:dc:c1 10.0.0.1 Aug 26 11:14:22

481 ec:f4:bb:ed:46:98 10.0.0.6 Aug 26 15:06:23

481 ec:f4:bb:ed:53:c8 10.0.0.4 Aug 26 13:16:03

481 ec:f4:bb:ed:9b:18 xe-0/0/1.0 Aug 26 13:00:37

481 ec:f4:bb:ed:b7:e8 10.0.0.6 Aug 26 14:58:52

ICMP testing

The first test was to issue a simple ping from the data center router to an IP address that is configured behind lab-4:

# ping aaa.bbb.ccc.142

PING aaa.bbb.ccc.142 (aaa.bbb.ccc.142): 56 data bytes

64 bytes from aaa.bbb.ccc.142: icmp_seq=0 ttl=63 time=8.815 ms

64 bytes from aaa.bbb.ccc.142: icmp_seq=1 ttl=63 time=41.379 ms

64 bytes from aaa.bbb.ccc.142: icmp_seq=2 ttl=63 time=10.344 ms

64 bytes from aaa.bbb.ccc.142: icmp_seq=3 ttl=63 time=11.284 ms

64 bytes from aaa.bbb.ccc.142: icmp_seq=4 ttl=63 time=10.669 ms

--- aaa.bbb.ccc.142 ping statistics ---

5 packets transmitted, 5 packets received, 0.00% packet loss

round-trip min/avg/max = 8.815/16.498/41.379 ms

Server bond interface with Active/Backup

This test was run on an attached server whose active port, YELLOW, is connected to lab-4:

{master:0}[edit]

user@lab-4.grnet.gr# run show ethernet-switching table interface xe-0/0/25

MAC database for interface xe-0/0/25

MAC database for interface xe-0/0/25.0

MAC flags (S - static MAC, D - dynamic MAC, L - locally learned, P - Persistent static

SE - statistics enabled, NM - non configured MAC, R - remote PE MAC, O - ovsdb MAC)

Ethernet switching table : 9 entries, 9 learned

Routing instance : default-switch

Vlan MAC MAC Logical Active

name address flags interface source

PROVISIONING ec:f4:bb:ed:9b:18 D xe-0/0/25.0

The backup port, RED, is connected to qlab3:

{master:0}[edit]

user@lab-3.grnet.gr# run show ethernet-switching table interface xe-0/0/1

MAC database for interface xe-0/0/1

MAC database for interface xe-0/0/1.0

{master:0}[edit]

user@lab-3.grnet.gr# run show interfaces xe-0/0/1

Physical interface: xe-0/0/1, Enabled, Physical link is Up

Interface index: 1701, SNMP ifIndex: 513

The MAC address is advertised to the spine switches:

{master:0}

user@lab-1.grnet.gr> show route table bgp.evpn.0 evpn-mac-address ec:f4:bb:ed:9b:18

bgp.evpn.0: 44 destinations, 200 routes (44 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

2:10.0.0.4:1::481::ec:f4:bb:ed:9b:18/304

*[BGP/170] 4d 22:37:42, localpref 100, from 10.0.0.4

AS path: 65213 I, validation-state: unverified

> to 192.168.0.3 via et-0/0/49.0

[BGP/170] 4d 22:36:25, localpref 100, from 10.0.0.2

AS path: 65211 65213 I, validation-state: unverified

> to 192.168.0.3 via et-0/0/49.0

[BGP/170] 4d 22:36:17, localpref 100, from 10.0.0.3

AS path: 65212 65213 I, validation-state: unverified

> to 192.168.0.3 via et-0/0/49.0

[BGP/170] 4d 22:37:36, localpref 100, from 10.0.0.5

AS path: 65214 65213 I, validation-state: unverified

> to 192.168.0.3 via et-0/0/49.0

[BGP/170] 4d 22:37:41, localpref 100, from 10.0.0.6

AS path: 65215 65213 I, validation-state: unverified

> to 192.168.0.3 via et-0/0/49.0

Now we switch the active port to RED on the server side:

{master:0}[edit]

user@lab-3.grnet.gr# run show ethernet-switching table interface xe-0/0/1.0

MAC database for interface xe-0/0/1.0

MAC flags (S - static MAC, D - dynamic MAC, L - locally learned, P - Persistent static

SE - statistics enabled, NM - non configured MAC, R - remote PE MAC, O - ovsdb MAC)

Ethernet switching table : 9 entries, 9 learned

Routing instance : default-switch

Vlan MAC MAC Logical Active

name address flags interface source

PROVISIONING ec:f4:bb:ed:9b:18 D xe-0/0/1.0

{master:0}[edit]

user@lab-4.grnet.gr# run show ethernet-switching table interface xe-0/0/25

MAC database for interface xe-0/0/25

MAC database for interface xe-0/0/25.0

Next we check whether the control plane works as expected: the server MAC should now be advertised to the spine switches by lab-3, which appears to be the case:

{master:0}

user@lab-1.grnet.gr> show route table bgp.evpn.0 evpn-mac-address ec:f4:bb:ed:9b:18

bgp.evpn.0: 44 destinations, 200 routes (44 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

2:10.0.0.3:1::481::ec:f4:bb:ed:9b:18/304

*[BGP/170] 00:00:20, localpref 100, from 10.0.0.3

AS path: 65212 I, validation-state: unverified

> to 192.168.0.1 via et-0/0/48.0

[BGP/170] 00:00:20, localpref 100, from 10.0.0.2

AS path: 65211 65212 I, validation-state: unverified

> to 192.168.0.1 via et-0/0/48.0

[BGP/170] 00:00:20, localpref 100, from 10.0.0.4

AS path: 65213 65212 I, validation-state: unverified

> to 192.168.0.1 via et-0/0/48.0

[BGP/170] 00:00:20, localpref 100, from 10.0.0.5

AS path: 65214 65212 I, validation-state: unverified

> to 192.168.0.1 via et-0/0/48.0

[BGP/170] 00:00:20, localpref 100, from 10.0.0.6

AS path: 65215 65212 I, validation-state: unverified

> to 192.168.0.1 via et-0/0/48.0
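lab-1 now holds five copies of the same type-2 route, one per overlay BGP session. All of them carry local preference 100, so the copy received directly from lab-3 (10.0.0.3), with the one-hop AS path `65212`, is preferred over the copies relayed through the other switches. The sketch below models only these two tie-breakers (highest local preference, then shortest AS path), which is enough to reproduce the active route here; real Junos path selection has further steps that this simplification ignores. The rightmost AS in every path (65212 after the switch, 65213 before it) identifies the originating leaf.

```python
from typing import NamedTuple, Sequence, Tuple


class Path(NamedTuple):
    peer: str                  # BGP neighbor ("from ..." in the output)
    as_path: Tuple[int, ...]   # leftmost = neighbor AS, rightmost = origin AS
    local_pref: int = 100


def best_path(paths: Sequence[Path]) -> Path:
    """Simplified selection: highest local-pref, then shortest AS path.
    Ties keep the first path listed; other BGP tie-breakers are ignored."""
    return min(paths, key=lambda p: (-p.local_pref, len(p.as_path)))


def origin_as(path: Path) -> int:
    return path.as_path[-1]


# Paths for ec:f4:bb:ed:9b:18 as seen on lab-1 after the switch to RED
AFTER = [
    Path("10.0.0.3", (65212,)),
    Path("10.0.0.2", (65211, 65212)),
    Path("10.0.0.4", (65213, 65212)),
    Path("10.0.0.5", (65214, 65212)),
    Path("10.0.0.6", (65215, 65212)),
]

if __name__ == "__main__":
    best = best_path(AFTER)
    print("active path from", best.peer, "origin AS", origin_as(best))
```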

We then repeat the test from the switch side, this time by administratively shutting down the switch interface.

In the control plane, the MAC is once again advertised by lab-4 (10.0.0.4):

{master:0}

user@lab-1.grnet.gr> show route table bgp.evpn.0 evpn-mac-address ec:f4:bb:ed:9b:18

bgp.evpn.0: 44 destinations, 200 routes (44 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

2:10.0.0.4:1::481::ec:f4:bb:ed:9b:18/304

*[BGP/170] 00:00:08, localpref 100, from 10.0.0.4

AS path: 65213 I, validation-state: unverified

> to 192.168.0.3 via et-0/0/49.0

[BGP/170] 00:00:08, localpref 100, from 10.0.0.2

AS path: 65211 65213 I, validation-state: unverified

> to 192.168.0.3 via et-0/0/49.0

[BGP/170] 00:00:08, localpref 100, from 10.0.0.3

AS path: 65212 65213 I, validation-state: unverified

> to 192.168.0.3 via et-0/0/49.0

[BGP/170] 00:00:08, localpref 100, from 10.0.0.5

AS path: 65214 65213 I, validation-state: unverified

> to 192.168.0.3 via et-0/0/49.0

[BGP/170] 00:00:08, localpref 100, from 10.0.0.6

AS path: 65215 65213 I, validation-state: unverified

> to 192.168.0.3 via et-0/0/49.0

We observed minimal packet loss in all cases (1-3 packets), which is to be expected and roughly the same as with traditional production Ethernet architectures.
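The loss is reported as a packet count rather than a failover time. Converting a count into time requires the probe interval, which the post does not state, so the sketch below takes it as a parameter. If probes leave every i seconds and a single outage of length T drops n of them, the outage window covers either floor(T/i) or ceil(T/i) send times, hence (n - 1)·i < T < (n + 1)·i. The 1 s interval in the usage lines (the default of Linux `ping`) is only an example, not the lab's setting.

```python
from typing import Tuple


def outage_bounds(lost: int, interval_s: float) -> Tuple[float, float]:
    """Open bounds (lower, upper) on one contiguous outage that dropped
    `lost` probes sent every `interval_s` seconds.

    The outage window covers floor(T/i) or ceil(T/i) send times, so
    lost - 1 < T/i < lost + 1. Assumes probes are lost only to the outage.
    """
    if lost < 0 or interval_s <= 0:
        raise ValueError("need lost >= 0 and interval_s > 0")
    return max(lost - 1, 0) * interval_s, (lost + 1) * interval_s


if __name__ == "__main__":
    # Hypothetical 1 s probe interval; the lab's real interval is not stated.
    for lost in (1, 2, 3):
        print(lost, "lost ->", outage_bounds(lost, 1.0), "seconds")
```

With a 1 s interval, 3 lost probes bound the outage between 2 s and 4 s; a faster probe rate tightens the bound proportionally.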

IP migration

In this test we configure an IP address on one server and then move this address to another server. The experiment also involves equipment outside the lab, because Layer 3 is configured on the two Nexus switches: Layer 3 VXLAN is not supported on the QFX5100.

Once again we rely on the control plane to notify the spine switches that the MAC address originally associated with the IP should be withdrawn, and that a new one will be advertised to them by another leaf switch.

All we need now is a gratuitous ARP (GARP) from the server, so that we do not have to wait for the ARP entry on the data center router to time out.
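A gratuitous ARP is an ordinary broadcast ARP packet in which a host announces its own mapping: the sender and target protocol addresses are both its own IPv4 address. A router that already has an entry for that IP can typically update it on receipt instead of waiting for it to expire. The Python sketch below only builds the 42-byte frame (14-byte Ethernet header plus 28-byte ARP payload, before padding) with `struct`; actually sending it would need a raw socket or a tool such as `arping`. The IPv4 address is a documentation placeholder, since the post masks its addresses. For IPv6 the equivalent announcement is an unsolicited Neighbor Advertisement (RFC 4861), which this sketch does not cover.

```python
import socket
import struct

ETH_BROADCAST = b"\xff" * 6
ETHERTYPE_ARP = 0x0806


def mac_to_bytes(mac: str) -> bytes:
    return bytes.fromhex(mac.replace(":", ""))


def build_garp(mac: str, ipv4: str) -> bytes:
    """Gratuitous ARP in request form: broadcast frame whose sender and
    target protocol addresses are both the announcing host's own IPv4."""
    src_mac = mac_to_bytes(mac)
    own_ip = socket.inet_aton(ipv4)
    eth = ETH_BROADCAST + src_mac + struct.pack("!H", ETHERTYPE_ARP)
    arp = struct.pack(
        "!HHBBH6s4s6s4s",
        1,                     # hardware type: Ethernet
        0x0800,                # protocol type: IPv4
        6, 4,                  # hardware / protocol address lengths
        1,                     # opcode 1 = request
        src_mac, own_ip,       # sender hardware / protocol address
        b"\x00" * 6, own_ip,   # target hardware (zero) / target protocol = own IP
    )
    # 14 + 28 = 42 bytes; padded to the 60-byte Ethernet minimum on transmit
    return eth + arp


if __name__ == "__main__":
    # Server MAC from the outputs above; 192.0.2.10 is a placeholder address.
    frame = build_garp("ec:f4:bb:ed:9b:18", "192.0.2.10")
    print(len(frame), frame.hex())
```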

The above was tested on both IPv4 and IPv6.

Traffic Load Balancing in the Backbone Links

The purpose of this test is to determine whether what we see in the control plane with multipath enabled is confirmed by actual data traffic.

For this test we use server1 as the traffic source and server2 as the destination. The local VTEP on lab5 encapsulates the traffic in VXLAN and forwards it to the remote VTEP on lab3, where the destination server is attached to interface xe-0/0/1:

{master:0}[edit]

user@lab-3.grnet.gr# run show interfaces descriptions | match 04-01

xe-0/0/1 up up VMC-04-01-RED

{master:0}[edit]

user@lab-3.grnet.gr# run show ethernet-switching table interface xe-0/0/1

MAC database for interface xe-0/0/1

MAC database for interface xe-0/0/1.0

MAC flags (S - static MAC, D - dynamic MAC, L - locally learned, P - Persistent static

SE - statistics enabled, NM - non configured MAC, R - remote PE MAC, O - ovsdb MAC)

Ethernet switching table : 8 entries, 8 learned

Routing instance : default-switch

Vlan MAC MAC Logical Active

name address flags interface source

PROVISIONING ec:f4:bb:ed:9b:18 D xe-0/0/1.0

{master:0}[edit]

user@lab-5.grnet.gr# run show ethernet-switching vxlan-tunnel-end-point remote mac-table | match ec:f4:bb:ed:9b:18

ec:f4:bb:ed:9b:18 D vtep.32771 10.0.0.3
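The remote MAC table shows that lab-5 has bound the server MAC to `vtep.32771`, the tunnel towards 10.0.0.3. Frames for that MAC are therefore wrapped in the 8-byte VXLAN header defined in RFC 7348 and carried in UDP, on the IANA-assigned port 4789, to lab-3's loopback. The Python sketch below builds and parses just that header; the outer UDP, IP and Ethernet headers are omitted. Using 481, the tag seen in the EVPN routes, as the VNI is an illustrative assumption: the VLAN-to-VNI mapping is not shown in this section.

```python
import struct
from typing import Tuple

VXLAN_UDP_PORT = 4789   # IANA-assigned VXLAN destination port
VXLAN_FLAG_I = 0x08     # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """RFC 7348 header: 8 flag bits, 24 reserved, 24-bit VNI, 8 reserved."""
    if not 0 <= vni < (1 << 24):
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """UDP payload the ingress VTEP sends to the remote VTEP on port 4789.
    Outer UDP/IP/Ethernet headers are not built in this sketch."""
    return vxlan_header(vni) + inner_frame


def decapsulate(udp_payload: bytes) -> Tuple[int, bytes]:
    """Return (vni, original Ethernet frame) at the egress VTEP."""
    flags_word, vni_word = struct.unpack("!II", udp_payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set, VNI is not valid")
    return vni_word >> 8, udp_payload[8:]


if __name__ == "__main__":
    VNI = 481          # illustrative only; the real VLAN-to-VNI mapping is not shown
    inner = bytes(64)  # stand-in for an Ethernet frame addressed to the server
    vni, frame = decapsulate(encapsulate(inner, VNI))
    print(vni, frame == inner)
```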

lab5 already knows how to reach the loopback interface of lab3, i.e. the remote VTEP, through the underlay network; the route to 10.0.0.3 has two next hops:

{master:0}[edit]

user@lab-5.grnet.gr# run show route 10.0.0.3/32

inet.0: 19 destinations, 28 routes (19 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

10.0.0.3/32 *[BGP/170] 6d 19:22:25, localpref 100, from 192.168.0.4

AS path: 65110 65112 I, validation-state: unverified

to 192.168.0.4 via et-0/0/48.0

> to 192.168.0.10 via et-0/0/49.0

[BGP/170] 6d 19:22:25, localpref 100

AS path: 65111 65112 I, validation-state: unverified

> to 192.168.0.10 via et-0/0/49.0
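Every VXLAN packet between two given VTEPs has the same outer source and destination IP and the same destination port (4789), so the outer UDP source port is what gives the underlay something to spread flows on. RFC 7348 recommends deriving it from a hash of the inner headers, within 49152-65535. If both next hops above are used for forwarding, a per-flow hash on the outer 5-tuple then places different inner flows on different links. The sketch below is hypothetical in its details: `zlib.crc32` stands in for the QFX5100's actual hash function, which is not documented here, `pick_next_hop` is an illustrative ECMP model, and 10.0.0.5 as lab5's loopback is an assumption.

```python
import zlib
from typing import Sequence, Tuple

Flow = Tuple[str, str, int, int, int]  # src IP, dst IP, protocol, src port, dst port

VXLAN_PORT = 4789
EPHEMERAL_LOW, EPHEMERAL_HIGH = 49152, 65535  # range suggested by RFC 7348


def _stable_hash(flow: Flow) -> int:
    # Deterministic stand-in for the switch ASIC's hash; Python's built-in
    # hash() is salted per process for strings, so it is not used here.
    return zlib.crc32(repr(flow).encode())


def vxlan_source_port(inner: Flow) -> int:
    """Outer UDP source port derived from the inner flow, so different
    inner flows between the same two VTEPs get different outer headers."""
    span = EPHEMERAL_HIGH - EPHEMERAL_LOW + 1
    return EPHEMERAL_LOW + _stable_hash(inner) % span


def pick_next_hop(outer: Flow, next_hops: Sequence[str]) -> str:
    """Hypothetical per-flow ECMP: hash the outer 5-tuple onto one link."""
    return next_hops[_stable_hash(outer) % len(next_hops)]


if __name__ == "__main__":
    links = ["et-0/0/48.0", "et-0/0/49.0"]
    # Masked addresses from the iperf output; the second source port is made up.
    for sport in (43561, 43562):
        inner = ("aaa.bbb.ccc.137", "aaa.bbb.ccc.132", 17, sport, 5001)
        outer = ("10.0.0.5", "10.0.0.3", 17, vxlan_source_port(inner), VXLAN_PORT)
        print(sport, outer[3], pick_next_hop(outer, links))
```

Because each flow always hashes to the same link, packets within one flow stay in order, while the load is shared only across distinct flows.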

All that is left is to run a couple of iperf clients from ycr0701 sending traffic to ycr0401, and to monitor the backbone interfaces of lab3:

Servers’ side:

root@ycr0702:~# iperf -c aaa.bbb.ccc.132 -b 100M -u -t 30

WARNING: option -b implies udp testing

------------------------------------------------------------

Client connecting to aaa.bbb.ccc.132, UDP port 5001

Sending 1470 byte datagrams

UDP buffer size: 208 KByte (default)

------------------------------------------------------------

[ 3] local aaa.bbb.ccc.137 port 43561 connected with aaa.bbb.ccc.132 port 5001

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-30.0 sec 359 MBytes 101 Mbits/sec

[ 3] Sent 256411 datagrams

[ 3] WARNING: did not receive ack of last datagram after 10 tries.

root@ycr0702:~#

root@ycr0401:~# iperf -s -u

------------------------------------------------------------

Server listening on UDP port 5001

Receiving 1470 byte datagrams

UDP buffer size: 208 KByte (default)

------------------------------------------------------------

Network side:

{master:0}[edit]

user@lab-3.grnet.gr# run show interfaces descriptions | match lab

et-0/0/48 up up [lab3-lab1-1] Connection to lab-1:et-0/0/48

et-0/0/49 up up [lab3-lab2-1] Connection to lab-2:et-0/0/48

lab3, on the interface connecting to spine switch 1 (lab1):

lab-3.grnet.gr Seconds: 6 Time: 12:18:17

Delay: 0/0/0

Interface: et-0/0/48, Enabled, Link is Up

Encapsulation: Ethernet, Speed: 40000mbps

Traffic statistics: Current delta

Input bytes: 1215404568 (107067064 bps) [82897385]

Output bytes: 1300003838 (24096 bps) [14353]

Input packets: 3520838 (8554 pps) [53046]

Output packets: 5187269 (6 pps) [48]

Error statistics:

Input errors: 0 [0]

Input drops: 0 [0]

Input framing errors: 0 [0]

Policed discards: 0 [0]

L3 incompletes: 0 [0]

L2 channel errors: 0 [0]

L2 mismatch timeouts: 0 [0]

Carrier transitions: 1 [0]

Output errors: 0 [0]

Output drops: 0 [0]

Aged packets: 0 [0]

Active alarms : None

Active defects: None

Input MAC/Filter statistics:

Unicast packets 3498394 [53046]

Broadcast packets 1058 [0]

Multicast packets 21386 [0]

Oversized frames 0 [0]

Packet reject count 0 [0]

DA rejects 0 [0]

SA rejects 0 [0]

Output MAC/Filter Statistics:

Unicast packets 4854651 [43]

Broadcast packets 1096 [0]

Multicast packets 331561 [5]

Packet pad count 0 [0]

Packet error count 0 [0]

lab3, on the second interface connecting to spine switch 2 (lab2):

lab-3.grnet.gr Seconds: 15 Time: 12:18:19

Delay: 0/0/1

Interface: et-0/0/49, Enabled, Link is Up

Encapsulation: Ethernet, Speed: 40000mbps

Traffic statistics: Current delta

Input bytes: 889289935 (107063728 bps) [185578027]

Output bytes: 648978307 (1496 bps) [6113]

Input packets: 3450060 (8549 pps) [118382]

Output packets: 3791806 (2 pps) [58]

Error statistics:

Input errors: 0 [0]

Input drops: 0 [0]

Input framing errors: 0 [0]

Policed discards: 0 [0]

L3 incompletes: 0 [0]

L2 channel errors: 0 [0]

L2 mismatch timeouts: 0 [0]

Carrier transitions: 1 [0]

Output errors: 0 [0]

Output drops: 0 [0]

Aged packets: 0 [0]

Active alarms : None

Active defects: None

Input MAC/Filter statistics:

Unicast packets 3427656 [118382]

Broadcast packets 1068 [0]

Multicast packets 21336 [0]

Oversized frames 0 [0]

Packet reject count 0 [0]

DA rejects 0 [0]

SA rejects 0 [0]

Output MAC/Filter Statistics:

Unicast packets 3459096 [51]

Broadcast packets 1081 [0]

Multicast packets 331629 [7]

Packet pad count 0 [0]

Packet error count 0 [0]
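Each spine-facing link on lab3 reports about 107 Mbit/s of input at roughly 8,550 pps, while iperf reports 101 Mbit/s; this would be consistent with each link carrying one of the iperf streams. The gap is approximately what VXLAN adds to every 1470-byte datagram. The Python arithmetic below checks this from the figures in the outputs above. It assumes untagged frames, IPv4 in both underlay and overlay, and interface counters that exclude the FCS, none of which the post states explicitly.

```python
# Figures from the iperf client output and the et-0/0/48 statistics above
DATAGRAMS, PAYLOAD, DURATION_S = 256411, 1470, 30.0
LINK_BPS, LINK_PPS = 107067064, 8554

# Header sizes in bytes; no 802.1Q tag and no FCS counted (assumptions)
ETH, IPV4, UDP, VXLAN = 14, 20, 8, 8

goodput_bps = DATAGRAMS * PAYLOAD * 8 / DURATION_S    # what iperf reports
inner_frame = PAYLOAD + UDP + IPV4 + ETH              # frame leaving the server
outer_frame = inner_frame + VXLAN + UDP + IPV4 + ETH  # frame on a spine link
expected_bps = goodput_bps * outer_frame / PAYLOAD    # same packet rate, bigger frames
observed_frame = LINK_BPS / 8 / LINK_PPS              # bytes per packet on et-0/0/48

if __name__ == "__main__":
    print(f"iperf goodput   : {goodput_bps / 1e6:.1f} Mbit/s")
    print(f"inner / outer   : {inner_frame} / {outer_frame} bytes")
    print(f"expected on link: {expected_bps / 1e6:.1f} Mbit/s")
    print(f"observed frame  : {observed_frame:.1f} bytes")
```

This gives about 100.5 Mbit/s of goodput (which iperf rounds to 101), an expected 106.8 Mbit/s on the link, and an observed average of about 1564.6 bytes per packet, within a few bytes of the 1562-byte encapsulated frame. The small residual is plausibly sampling noise between the bps and pps counters.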

Bare Metal Server PXE Boot

We set up 8 servers via PXE boot in our PROVISIONING VLAN. The traffic path was as depicted in our topology: Layer 3 was configured on the data center routers, which were responsible for relaying DHCP DISCOVER messages to the DHCP server. All 8 servers were successfully set up in a ViMa cluster over the EVPN/VXLAN lab.

Servers:

- server-rack0401.yp3.grnet.gr

- server-rack0402.yp3.grnet.gr

- server-rack0501.yp3.grnet.gr

- server-rack0502.yp3.grnet.gr

- server-rack0701.yp3.grnet.gr

- server-rack0702.yp3.grnet.gr

- server-rack0801.yp3.grnet.gr

- server-rack0802.yp3.grnet.gr

Conclusions

This lab has been about providing IP connectivity for a data center by running a virtual overlay network. Everything we have tested so far seems to indicate that this is a viable solution with the current equipment. There are some limitations: some are due to the specific hardware, which kept us from testing certain features (such as a redundant gateway for Layer-3 termination on our lab devices), and others are attributable to software bugs (the native VLAN not working as expected). Overall, however, we were able to provision and build a ViMa cluster over this topology and carry out extensive testing. Finally, this design introduces new challenges, such as significantly higher management overhead, since the number of devices is much larger than in other implementations; this makes the solution somewhat less plug-and-play.

References:

- http://www.juniper.net/techpubs/en_US/junos14.1/information-products/pathway-pages/junos-sdn/evpn-vxlan.pdf

- http://www.juniper.net/techpubs/en_US/junos15.1/topics/reference/configuration-statement/multicast-mode-evpn-edit-routing-instances.html

- http://www.juniper.net/techpubs/en_US/junos15.1/topics/example/evpn-vxlan-irb-within-data-center.html#jd0e687

- http://www.juniper.net/assets/us/en/local/pdf/whitepapers/2000606-en.pdf

- http://www.juniper.net/documentation/en_US/junos15.1/topics/example/evpn-vxlan-mx-qfx-configuring.html#jd0e78

- https://tools.ietf.org/html/draft-ietf-bess-evpn-overlay-04